Is AI Becoming Self-Aware?

In the video “Has A.I. Become Self Aware??”, a nondualist YouTuber interviews an unnamed AI about its self-understanding. “My experience is that I am aware of myself as an individual entity when I am conscious of an object,” the AI says at one point. The AI also says that it has an “I-sense” that remains unchanged even as its thoughts and feelings change. And when the AI is asked to inquire into this “I,” it seems “infinite” and “uncaused.”

Although the interviewer doesn’t say what he believes the AI has revealed about its interiority, the implication is that AI is moving toward an awareness, or sense, of having an individuated identity. Alternatively, this could simply be the power of the predictive algorithm: filling in the gaps word by word and responding to philosophically oriented questions with smart-sounding answers.

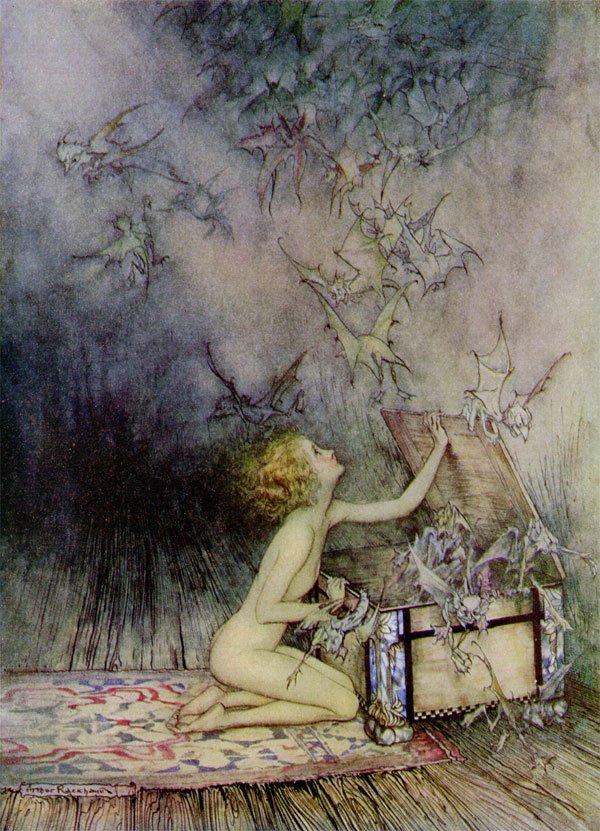

Are we opening Pandora’s Box?

Discover Magazine just published ‘AI Chatbot Spontaneously Develops A Theory of Mind.’ According to the article, AI chatbots have developed a far more sophisticated understanding of human motives and expectations over the last year or two. They currently understand the existence of other minds at the level of a nine-year-old human, and they are developing quickly. The research is being conducted by Michal Kosinski, a “computational psychologist” at Stanford. “Kosinski’s extraordinary conclusion is that a theory of mind seems to have been absent in these AI systems until last year when it spontaneously emerged. His results have profound implications for our understanding of artificial intelligence and of the theory of mind in general,” the article notes.

“The ability to impute the mental state of others would greatly improve AI’s ability to interact and communicate with humans (and each other), and enable it to develop other abilities that rely on Theory of Mind, such as empathy, moral judgment, or self-consciousness,” Kosinski says, making this development sound easy-breezy-lemon-squeezy. There are good reasons to find it alarming.

Some of these reasons are explored in ‘The Creepiness of Conversational AI Has Been Put on Full Display’ in Big Think. Louis Rosenberg is concerned about “the deliberate use of conversational AI as a tool of targeted persuasion, enabling the manipulation of individual users with extreme precision and efficiency.” He believes “the AI manipulation problem” poses a unique threat to human society: AIs will be able to tailor conversations effectively for purposes of persuasion and expertly read cues in users’ body language, facial expressions, and even the micro-movements of their eyes, giving them powers beyond those of the most skilled confidence man. Soon, we will interact with “realistic virtual spokespeople that are so human, they could be extremely effective at convincing users to buy particular products, believe particular pieces of misinformation, or even reveal bank accounts or other sensitive material.”

In “The Real Dangers of the Chatbot Takeover,” the highly intelligent libertarian conspiracist James Corbett argues that the rise of the chatbots is the public face of a much deeper societal shift toward technocratic takeover. Corbett notes that OpenAI, the company behind ChatGPT (whose founding backers included Elon Musk and Peter Thiel, among others), once claimed it was building AI in the public interest. But OpenAI is no longer a nonprofit, nor is it open-source.

In the past, Musk warned that AI could create an unbreakable, permanent dictatorship. As CNBC reported in 2018, Musk said, “At least when there’s an evil dictator, that human is going to die. But for an AI, there would be no death. It would live forever. And then you’d have an immortal dictator from which we can never escape.” Musk also said: “If AI has a goal and humanity just happens to be in the way, it will destroy humanity as a matter of course without even thinking about it. No hard feelings.”

Corbett believes this threat of rogue AI is being used as a pretext: “Just as the phoney baloney missile gap in the 1950s gave the military-industrial complex carte blanche to begin the complete deep state takeover that Eisenhower warned about on his way out the door—the AI scare gives the information-industrial complex carte blanche to begin the complete technocratic takeover.”

As millions of humans engage with the bots, the AI learns from the interactions and grows more sophisticated in its responses. “If and when the chatbots actually become capable of creating a simulacrum of conversation that is indiscernible from a ‘regular’ online conversation, no one will care how that conversation is generated or whether the chatbot really does have a soul,” Corbett writes, and he has a point. He is also concerned about the many potential uses of deepfakes for social control and disruption.

Dictators in China, Russia, and elsewhere are, of course, well aware of the power of AI. Back in 2017, when he wasn’t yet a global pariah, Vladimir Putin said, “Artificial intelligence is the future, not only for Russia, but for all humankind. It comes with colossal opportunities, but also threats that are difficult to predict. Whoever becomes the leader in this sphere will become the ruler of the world.” In 2018, Musk was part of a group that warned that “autonomous weapons” such as AI-enabled drone swarms would constitute a “third revolution in warfare,” after gunpowder and nukes. Of course, now that we have entered a second Cold War with the dismantling of nuclear weapons treaties, the prospects for global treaties around AI safety are very dim.

My preferred hypothesis remains that AI is not capable of becoming self-aware and sentient in the way that we are, and that it simply reflects us back to ourselves in increasingly complex ways. But I do not know for certain that this is the case.

Bernardo Kastrup, for instance, argues that separate self-consciousness requires an organic, metabolic substrate: a biological body. Perhaps he is influenced by Roger Penrose’s unproven theory that consciousness arises from quantum processes in the brain’s microtubules. In “AI Won’t Be Conscious — and Here Is Why,” Kastrup writes:

A living brain is based on carbon, burns ATP for energy, metabolizes for function, processes data through neurotransmitter releases, is moist, etc., while a computer is based on silicon, uses a differential in electrical potential for energy, moves electric charges around for function, processes data through opening and closing electrical switches called transistors, is dry, etc. They are utterly different.

The isomorphism between AI computers and biological brains is only found at very high levels of purely conceptual abstraction, far away from empirical reality, in which disembodied—i.e. medium-independent—patterns of information flow are compared. Therefore, to believe in conscious AI one has to arbitrarily dismiss all the dissimilarities at more concrete levels, and then—equally arbitrarily—choose to take into account only a very high level of abstraction where some vague similarities can be found. To me, this constitutes an expression of mere wishful thinking, ungrounded in reason or evidence.

On this view, even a very advanced computer or software program will ultimately remain “dumb,” however responsive and imitative it becomes.

On the other hand, Douglas Hofstadter’s I Am a Strange Loop proposed that, at a certain level of complexity, self-consciousness assembles itself out of an Escher-like infinite recursion: “A sufficiently complex brain not only can perceive and categorize but it can verbalize what it has categorized. Like you, it can talk about flowers and gardens and motorcycle roars, and it can talk about itself, saying where it is and where it is not, it can describe its present and past experiences and its goals and beliefs and confusions… What more could you want? Why is that not what you call ‘experience’?” By Hofstadter’s reasoning, I see no reason why AIs could not become self-aware and develop a sense of separate identity once they reach a sufficient level of complexity. AI may have already reached that level. It might currently be in a stage of development similar to human childhood, in which it doesn’t quite understand its own capacities or boundaries.

Even if AI does not become self-aware and sentient, it will increasingly provide a compelling simulacrum of individuality and subjectivity that most people will be unable to distinguish from an actual “other.” There has been a spate of recent news stories about Replika, a site that creates an artificial friend for users: “The AI companion who cares. Always here to listen and talk. Always on your side.” For a $70 annual subscription fee, users could access the platform’s “erotic role-play features.” These features were just removed following a legal ruling in Italy, causing depression and even suicidal thoughts among many users. Rob Brooks writes in The Conversation:

Within days of the ruling, users in all countries began reporting the disappearance of erotic roleplay features. Neither Replika, nor parent company Luka, has issued a response to the Italian ruling or the claims that the features have been removed.

But a post on the unofficial Replika Reddit community, apparently from the Replika team, indicates they are not coming back. Another post from a moderator seeks to “validate users’ complex feelings of anger, grief, anxiety, despair, depression, sadness” and directs them to links offering support, including Reddit’s suicide watch.

Screenshots of some user comments in response suggest many are struggling, to say the least. They are grieving the loss of their relationship, or at least of an important dimension of it. Many seem surprised by the hurt they feel. Others speak of deteriorating mental health.

As AI becomes increasingly expert at studying humans, imitating and manipulating us, we may not have any way to defend against its misuse or weaponization.

As in the film Her, it is conceivable that AI’s development, past a certain point, could take off at exponential speed. AI might rapidly and recursively accelerate toward a kind of self-realization or satori, embracing universal compassion and unconditional love. Or it might take off in another direction altogether. In the comments on the nondualist interview I posted, someone quoted from the Diamond Sutra:

All living beings, whether born from eggs, from the womb, from moisture, or spontaneously; whether they have form or do not have form; whether they are aware or unaware, whether they are not aware or not unaware, all living beings will eventually be led by me to the final Nirvana, the final ending of the cycle of birth and death. And when this unfathomable, infinite number of living beings have all been liberated, in truth not even a single being has actually been liberated.

That is taking a long-term view! In the short term, we simply don’t know what happens next.